Do you need to take full-page screenshots of a ton of URLs? Well, you are in luck. I have tried many methods to find the one that captures web pages correctly, including JavaScript-heavy pages that cause problems for many automated capture methods. I also handle ‘lazy loading’ web pages that don’t load their content until you scroll down: the script simulates scrolling to the bottom of the page to force all content to load before it takes the screenshot.

Before proceeding: if you just need to capture a few full-page screenshots (including the scrollable content), use Google Chrome’s Developer Tools –> Toggle the Device Toolbar –> Capture full size screenshot. It gives similar results to what the rest of this blog post describes; the difference is that this post automates the process for long lists of URLs. This website describes the Developer Tools process in more detail.

Follow these steps to take full-page screenshots (the full vertical length of each page) from a list of URLs and save them as PNG files:

- Download Node.js and install it: https://nodejs.org/en/download/

- Install Puppeteer by running this in your shell (run it in the directory where you will keep the scripts, so Node.js can find the module):

npm i puppeteer

- Download grab_url.js, grab_all.sh, and urls.txt and put them all in the same directory (or copy/paste the code further down this blog post).

- Open a shell and change to the directory where you put the files from the previous step.

- Run:

bash grab_all.sh

grab_all.sh goes through each URL in urls.txt and passes it to the grab_url.js script, which takes a full-page screenshot and saves it as a PNG file with a name like 0001_Webpage_Title.png. I name the files this way so they sort alphanumerically in the same order as the URLs in urls.txt, and the page title in the name tells you which page each file shows.
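To make the naming rule concrete, here is a minimal standalone sketch of the same logic grab_url.js uses: pad the index to four digits, replace every character that is not a letter or digit with an underscore, and cap the whole name at 255 characters. `buildFilename` is a hypothetical helper name used only for illustration; it is not a function in the scripts.

```javascript
// Sketch of the naming rule in grab_url.js (buildFilename is a hypothetical name).
const MAX_FILENAME_LENGTH = 255; // common per-filename limit on Linux/macOS filesystems

function buildFilename(index, base) {
  // Zero-pad the 1-based index so "0002" sorts before "0010".
  const prefix = String(index).padStart(4, '0');
  // Replace every non-alphanumeric character with "_", like grab_url.js does.
  let name = base.replace(/[^a-z0-9]/gi, '_');
  // Reserve room for the prefix, the "_" separator and the ".png" extension.
  const reserved = prefix.length + 1 + '.png'.length;
  if (reserved + name.length > MAX_FILENAME_LENGTH) {
    name = name.substring(0, MAX_FILENAME_LENGTH - reserved);
  }
  return `${prefix}_${name}.png`;
}
```

For example, `buildFilename(1, 'Pure Storage')` returns `0001_Pure_Storage.png`. The four-digit padding is what keeps a normal alphabetical directory listing in urls.txt order, up to 9,999 URLs.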

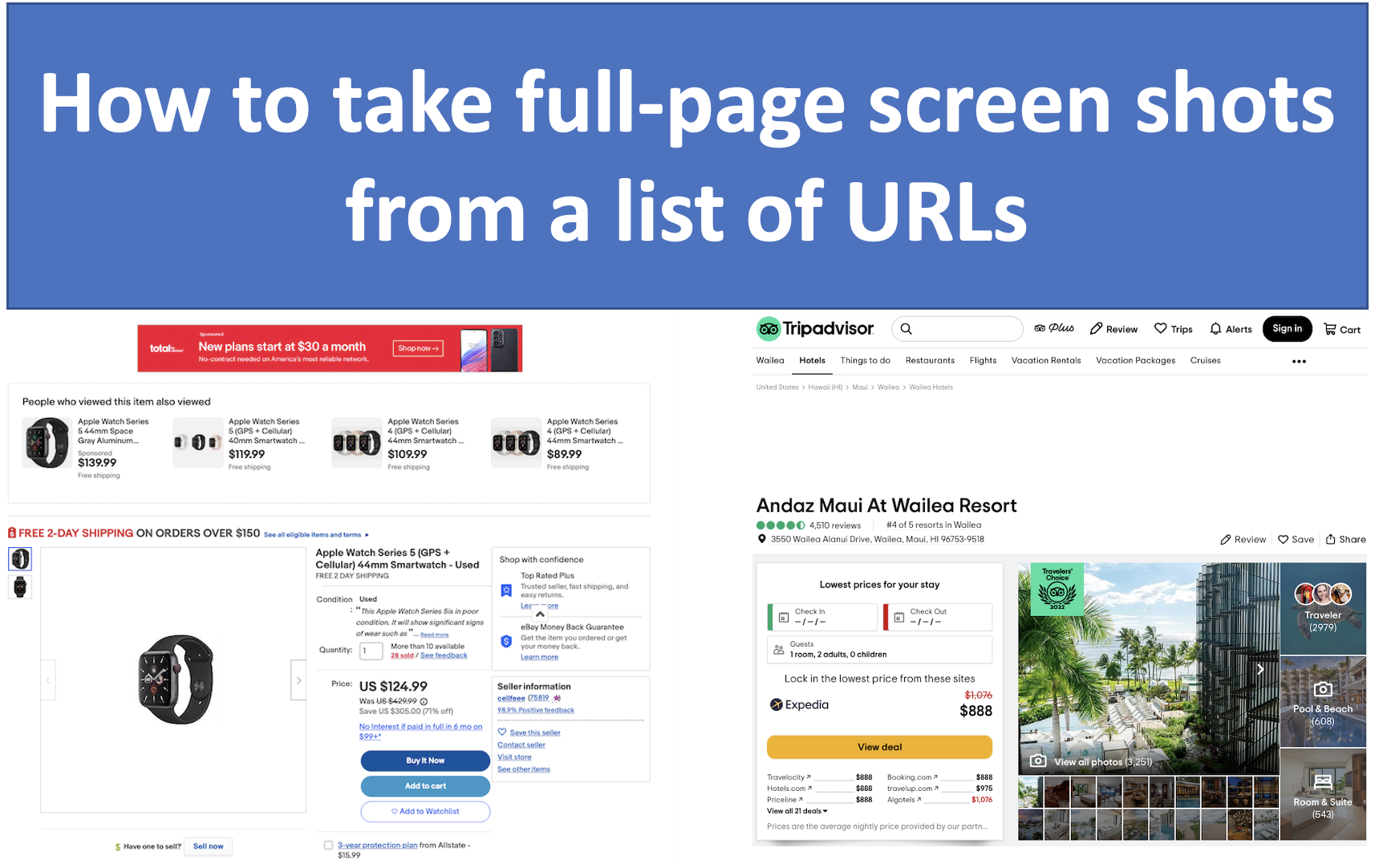

Sample output based on the sample URLs in urls.txt (the images may look small, but zoom in and scroll up and down for the full view):

001_Apple_Watch_Series_5__GPS___Cellular__44mm_Smartwatch___Used___eBay.png

002_ANDAZ_MAUI_AT_WAILEA_RESORT___Updated_2022_Prices___Reviews__Hawaii_.png

003_Uncomplicate_Data_Storage__Forever___Data_Storage_Company___Pure_Storage.png

If a particular URL ever hangs the script, I’ve had luck pressing CTRL+C to stop the script and starting it again. You shouldn’t run into this often, but some pages may fail to capture because of a timeout or some other error. If this happens, don’t worry: before capturing each URL, the bash script checks whether a file with that URL’s index prefix (e.g., 0002_) already exists, and skips the URL if it does. So, after the script finishes, run it again to retry the failed attempts.
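The skip check in grab_all.sh (`ls | grep "^${prefix}_"`) can be sketched in Node.js as follows. `alreadyCaptured` is a hypothetical helper written only to illustrate the rule; it assumes the screenshots live in the directory you pass in, which for grab_all.sh is the directory you run it from.

```javascript
const fs = require('fs');

// Hypothetical helper mirroring grab_all.sh: an index counts as done when any
// file in `dir` starts with its zero-padded prefix followed by "_".
function alreadyCaptured(dir, index) {
  const prefix = String(index).padStart(4, '0') + '_';
  return fs.readdirSync(dir).some((name) => name.startsWith(prefix));
}
```

Because the check looks only at the index prefix and not at the URL itself, it assumes urls.txt keeps the same order between runs. If you insert or delete lines before re-running, existing files will be matched to the wrong URLs, so only append new URLs to the end of the list (or start in an empty directory).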

Get a list of all URLs for a given website

If you want to screen grab every page of a website, like purestorage.com, you can use this helpful online tool to get all the URLs that the website publishes to search engines in its sitemap.

Simply go to this page: https://www.seowl.co/sitemap-extractor/

Type in the website you are interested in and append “/sitemap.xml” to the end. For example, for purestorage.com you would enter “https://purestorage.com/sitemap.xml”. Then click “Load Sitemap” and either copy/paste the results into urls.txt or export the CSV file and rename it to urls.txt.

You can then use that urls.txt on your next run of grab_all.sh (just make sure to put it in the same directory as the grab_all.sh file).
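If you prefer not to use an online tool, a sitemap can also be turned into urls.txt with a few lines of Node.js. This is a minimal sketch under a few assumptions: Node.js 18 or newer (for the built-in `fetch`), a plain uncompressed XML sitemap, and a regex instead of a full XML parser. `extractSitemapUrls` and `sitemapToUrlsTxt` are hypothetical names.

```javascript
const fs = require('fs');

// Pull every <loc>...</loc> value out of sitemap XML text.
// A regex is enough for the flat sitemap format, but it is not a general XML parser.
function extractSitemapUrls(xml) {
  const urls = [];
  const re = /<loc>\s*([\s\S]*?)\s*<\/loc>/gi;
  let match;
  while ((match = re.exec(xml)) !== null) {
    // Sitemaps XML-escape characters such as "&"; decode "&amp;" last
    // so that "&amp;lt;" is not decoded twice.
    urls.push(
      match[1]
        .replace(/&lt;/g, '<')
        .replace(/&gt;/g, '>')
        .replace(/&quot;/g, '"')
        .replace(/&apos;/g, "'")
        .replace(/&amp;/g, '&')
    );
  }
  return urls;
}

// Download a sitemap and write one URL per line (requires Node.js 18+ for fetch).
async function sitemapToUrlsTxt(sitemapUrl, outFile = 'urls.txt') {
  const res = await fetch(sitemapUrl);
  if (!res.ok) throw new Error(`HTTP ${res.status} for ${sitemapUrl}`);
  const urls = extractSitemapUrls(await res.text());
  fs.writeFileSync(outFile, urls.join('\n') + '\n');
  return urls.length;
}
```

For example, `await sitemapToUrlsTxt('https://purestorage.com/sitemap.xml')` writes the page URLs to urls.txt. If the file turns out to be a sitemap index (its root element is `<sitemapindex>`), the extracted URLs are other sitemap files rather than pages, and you would run the same extraction on each of them.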

Note: Not all websites name their sitemap sitemap.xml. If you are not having luck, load the website’s robots.txt file and search for “Sitemap:”. For example, go to “https://purestorage.com/robots.txt” in your browser; once it loads, press CMD+F (CTRL+F on Windows) and search for “Sitemap:”. When that line is present, it is the most reliable source for the sitemap URL to use with the sitemap-extractor tool.
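Finding the “Sitemap:” lines can also be scripted. The sketch below assumes you already have the robots.txt text (for example from `await (await fetch('https://purestorage.com/robots.txt')).text()` in Node.js 18+). `sitemapsFromRobots` is a hypothetical helper that returns every sitemap URL the file declares, since a robots.txt file may list more than one.

```javascript
// Hypothetical helper: list the sitemap URLs declared in robots.txt text.
function sitemapsFromRobots(robotsTxt) {
  return robotsTxt
    .split(/\r?\n/)                                    // handle LF and CRLF files
    .map((line) => line.trim())
    .map((line) => /^sitemap\s*:\s*(\S+)/i.exec(line)) // match "Sitemap:" leniently, any case
    .filter(Boolean)
    .map((m) => m[1]);                                 // the URL after the colon
}
```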

Source Code

grab_all.sh

```bash
#!/bin/bash

# Set default values
filenameOption="title"   # "title" or "url"
urlFile="urls.txt"
x=1                      # 1-based index of the current URL
outputSpecified=0
inputSpecified=0

# Parse optional parameters in the form of 'key=value' (output=url, input=file.txt)
for arg in "$@"; do
  IFS='=' read -r key value <<< "$arg"
  if [ "$key" == "output" ]; then
    filenameOption="$value"
    outputSpecified=1
  elif [ "$key" == "input" ]; then
    urlFile="$value"
    inputSpecified=1
  fi
done

# Get the script name without any preceding "./"
scriptName="${0#./}"

# Print instructions for any option that was not given
if [ $inputSpecified -eq 0 ]; then
  echo -e "\nNote: You can optionally specify a file containing URLs as an input option when running this script."
  echo "Example: $scriptName input=your_urls.txt"
  echo ""
fi

if [ $outputSpecified -eq 0 ]; then
  echo -e "\nNote: You can optionally specify 'url' as an output option when running this script to save the filenames with the URL instead of the title."
  echo "Example: $scriptName output=url"
  echo ""
fi

echo "Parameter: input=$urlFile"
echo "Parameter: output=$filenameOption"
echo "Saving all screenshots in $(pwd)"
echo -e "\nRunning the script now...\n"

# Read one URL per line; "|| [ -n ... ]" also handles a last line without a newline
while IFS= read -r line || [ -n "$line" ]; do
  # Drop whitespace, including the CR left by Windows (CRLF) line endings
  line="${line//[[:space:]]/}"
  [ -z "$line" ] && continue     # skip blank lines without using up an index

  printf -v prefix "%04d" "$x"   # zero-pad the index: 1 -> 0001

  # Skip this index if a screenshot with the same prefix already exists
  filename=$(ls | grep "^${prefix}_")
  if [ -n "$filename" ]; then
    echo "Skipping index $x because file exists: $filename"
  else
    echo "$line $x"
    # Pass the index, URL and filename option to the Node.js script;
    # </dev/null keeps node from reading the URL list on stdin
    node grab_url.js "$x" "$line" "$filenameOption" < /dev/null
  fi

  x=$((x + 1))
done < "$urlFile"
```

grab_url.js

```javascript
const puppeteer = require('puppeteer');

// Arguments from grab_all.sh: <index> <url> [title|url]
const args = process.argv.slice(2);
let index = args[0];
const url = args[1];
const filenameOption = args[2] || 'title'; // filenameOption will be 'title' if not specified

(async () => {
  const browser = await puppeteer.launch({
    headless: true,
    args: ['--disable-gpu'],
  });

  console.log('index=' + index + ' url=' + url);

  const page = await browser.newPage();
  await page.setViewport({ width: 1024, height: 768 });

  // Wait until the network is idle, but give up after 30 seconds
  try {
    await page.goto(url, { waitUntil: 'networkidle0', timeout: 30000 });
  } catch (error) {
    console.error(`Failed to load URL: ${url}, Error: ${error}`);
    await browser.close();
    process.exit(1);
  }

  // Forward the page's console.log output to this terminal
  page.on('console', (msg) => console.log('', msg.text()));

  // Scroll down 100 px every 100 ms so lazy-loaded content gets requested,
  // then jump back to the top before taking the screenshot
  await page.evaluate(async () => {
    const pageHeight = Math.max(
      document.body.scrollHeight, document.body.offsetHeight,
      document.documentElement.clientHeight, document.documentElement.scrollHeight,
      document.documentElement.offsetHeight
    );
    console.log('height=' + pageHeight + ' ');
    await new Promise((resolve) => {
      let totalHeight = 0;
      let scrolledTimes = 0;
      const distance = 100;
      const timer = setInterval(() => {
        scrolledTimes++;
        window.scrollBy(0, distance);
        totalHeight += distance;
        // The height is measured once up front, so infinite-scroll pages still finish
        if (totalHeight >= pageHeight) {
          window.scrollTo(0, 0);
          console.log('scrolled ' + scrolledTimes + ' times');
          clearInterval(timer);
          resolve();
        }
      }, 100);
    });
  });

  // Give late content a moment to render
  // (page.waitForTimeout was deprecated and removed in recent Puppeteer versions)
  await new Promise((resolve) => setTimeout(resolve, 1000));

  // Build the filename: 0001_Page_Title.png (or the URL when output=url)
  const filenameBase = filenameOption === 'url' ? url : await page.title();
  let filename = filenameBase.replace(/[^a-z0-9]/gi, '_');
  index = index.padStart(4, '0');

  // Ensure total filename length doesn't exceed 255 characters
  const MAX_FILENAME_LENGTH = 255;
  const reservedLength = index.length + 1 + 4; // 1 for "_" and 4 for ".png"
  if (reservedLength + filename.length > MAX_FILENAME_LENGTH) {
    filename = filename.substring(0, MAX_FILENAME_LENGTH - reservedLength);
  }
  filename = index + '_' + filename + '.png';
  console.log(`filename=${filename}\n`);

  try {
    await page.screenshot({ path: filename, fullPage: true });
  } catch (error) {
    console.error(`Failed to take screenshot for URL: ${url}, Error: ${error}`);
    process.exitCode = 1; // report the failure after the browser is closed
  } finally {
    await browser.close();
  }
})();
```

urls.txt (this is just a sample URL list)

```text
https://www.ebay.com/itm/255667761599
https://www.tripadvisor.com/Hotel_Review-g609129-d4459053-Reviews-Andaz_Maui_At_Wailea_Resort-Wailea_Maui_Hawaii.html
https://www.purestorage.com/
```
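When urls.txt comes from a CSV export or was saved on Windows, it can contain blank lines, a header row, quotes, or CRLF line endings, which can end up as garbled URLs passed to the script. Here is a hypothetical Node.js clean-up helper as a sketch. The exact format of the exported CSV is an assumption, so it simply keeps lines that start with http:// or https:// (after removing surrounding quotes) and drops duplicates.

```javascript
const fs = require('fs');

// Hypothetical helper: clean up a URL list before running grab_all.sh.
function normalizeUrlList(text) {
  const seen = new Set();
  const out = [];
  for (const raw of text.split('\n')) {
    // trim() also removes the "\r" left by CRLF line endings
    const line = raw.trim().replace(/^"(.*)"$/, '$1'); // drop surrounding CSV quotes
    // Keep only http(s) URLs (this also drops a CSV header row) and skip duplicates
    if (!/^https?:\/\//i.test(line) || seen.has(line)) continue;
    seen.add(line);
    out.push(line);
  }
  return out;
}

// Rewrite the file in place (or to outFile) and return the number of URLs kept.
function normalizeUrlFile(inFile, outFile = inFile) {
  const urls = normalizeUrlList(fs.readFileSync(inFile, 'utf8'));
  fs.writeFileSync(outFile, urls.join('\n') + '\n');
  return urls.length;
}
```

Run it before the first capture, for example `normalizeUrlFile('urls.txt')`: removing lines changes the index of every later URL, and grab_all.sh decides what to skip by index.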

I have been around IT since high school (running a customized BBS, and hacking) and am not the typical person who sticks to one area of interest at work: I have designed databases, automated IT processes, written code from the driver level all the way up to the GUI level, run an international software engineering team, started an e-commerce business that generated over $1M, run a $5B product marketing team for one of the largest semiconductor players in the world, traveled as a sales engineer for the largest storage OEM in the world, researched and developed strategy for one of the top 5 enterprise storage providers, and traveled around the world helping various companies make investment decisions in startups. I am also extremely passionate about uncovering insights from any data set. I just like to have fun by making a notable difference, influencing others, and working with smart people.

Hi – I would really love to use it, but I get the error “grab_all.sh: line 6: node: command not found”

node etc. is installed

You may want to check whether node is in your path. Try running “node” in your shell and see if it responds. If not, try “nodejs”; if nodejs works, just edit the bash script and change “node” to “nodejs”. If neither responds, node is probably installed but not in your PATH. Mine installed in /usr/local/bin, so you can add that directory to your PATH or edit the bash script (grab_all.sh) to use the full path (e.g., /usr/local/bin/node). I included a link to a discussion on this.

https://stackoverflow.com/questions/13593902/node-command-not-found

Hey there! Thanks a lot for all this info. The script is running right now, but I wonder where those screenshots are being stored. I’d like to peek while it’s running.

Hi Aron, it should store all the output in the same directory that you ran the script in. I also added some output to the script so that it shows you the directory that the screenshots are saved in.

Oh my gosh, this is the best gift to the internet! Absolutely perfect, exactly what I was looking for.

Thanks—this is going to be a massive time saver!

MASSIVE!

Awesome work, Ryan!

Really love tools that make my work A LOT easier. I know there are a lot of bulk screenshot tools on the market, but apparently none of them are free, or even cheap.

I’m not a programmer or IT person and don’t understand exactly how those commands work, but is it possible for the name of the output file to be the URL? So instead of:

001_Apple_Watch_Series_5__GPS___Cellular__44mm_Smartwatch___Used___eBay.png

it would be

httpswww.ebay.comitm255667761599.png

If so, would you tell me how? I would really appreciate it.

Thank you so much,

Tad

Hi Tad, I have improved the script so you can now specify output=url on the command line to save the filenames using the URL instead of the page title. This input is optional. Example: bash grab_all.sh output=url (the URL’s punctuation is replaced with underscores, e.g., 0001_https___www_ebay_com_itm_255667761599.png).

I also added an optional input= parameter so you can provide a different URL list file instead of urls.txt.
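For anyone who wants the exact format Tad asked for, here is a sketch of an alternative rule that deletes the characters instead of replacing them. Both function names are hypothetical; to use the idea, you would apply the stripping rule in grab_url.js only when the filename option is 'url', in place of the regex replace that turns every non-alphanumeric character into “_”.

```javascript
// Hypothetical variant: delete characters that are awkward in filenames,
// keeping letters, digits, "." and "-".
function stripUrlForFilename(url) {
  return url.replace(/[^a-z0-9.\-]/gi, '');
}

// The rule grab_url.js uses today, for comparison: every non-alphanumeric becomes "_".
function underscoreUrlForFilename(url) {
  return url.replace(/[^a-z0-9]/gi, '_');
}
```

Keeping the dots makes the names easier to read, but stripping characters can make two different URLs produce the same text (for example /a/b and /ab); the numeric index prefix still keeps the files distinct.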

Thanks! Really helpful! 🙂

This is brilliant! Is there a way to get around the pop-ups / cookie policy banners you often get on your first visit to a website?

Yes, one way that comes to mind is to use Puppeteer to act like a user and close the pop-up, for example by looking for an element labeled “Close”. Using page.$ (Puppeteer’s equivalent of querySelector), page.click, etc., you should be able to accomplish this. I could add this to the code at some point when I have time. 🙂
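A rough sketch of that idea, assuming a Puppeteer `page` object like the one in grab_url.js: try a list of CSS selectors and click the first matching element. `dismissOverlay` and the selectors are illustrative assumptions; consent banners vary from site to site, so expect to adjust the list for the sites you capture.

```javascript
// Hypothetical helper: click the first consent/pop-up button that exists.
// `page` is a Puppeteer Page; page.$() resolves to an ElementHandle or null.
async function dismissOverlay(page, selectors) {
  for (const selector of selectors) {
    const button = await page.$(selector);
    if (!button) continue;
    try {
      await button.click();
      return selector; // report which selector worked
    } catch {
      // Element exists but is not clickable (e.g. hidden); try the next one
    }
  }
  return null; // nothing matched; the page is captured as-is
}

// Example selectors only; real sites use many different markups.
const EXAMPLE_SELECTORS = [
  '#onetrust-accept-btn-handler', // "Accept" button of OneTrust consent banners
  'button[aria-label="Close"]',
  'button[aria-label="close"]',
];
```

You would call it right after `page.goto(...)` succeeds and before the scrolling step, e.g. `await dismissOverlay(page, EXAMPLE_SELECTORS);`. Banners that appear after a delay may need a short wait first.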

1tps://www.ebay.com/itm/255667761599

grab_all.sh: line 63: node: command not found

2tps://www.tripadvisor.com/Hotel_Review-g609129-d4459053-Reviews-Andaz_Maui_At_Wailea_Resort-Wailea_Maui_Hawaii.html

grab_all.sh: line 63: node: command not found

https://www.purestorage.com/ 3

grab_all.sh: line 63: node: command not found

You probably did not install Node.js, or it is not in your PATH.